Generative AI-based application development 101

Generative AI and Large Language Models (LLMs) are about much more than flashy chatbots and deepfakes. Among other things, they can make application development easier and more efficient. As AI gets more popular, using LLMs to automate tasks is becoming an essential skill.

This article will introduce key concepts and describe successful methods for using LLMs to create automated applications. By applying the patterns discussed here, developers can make their LLM-based apps more efficient and reliable.

All images in this article, except where otherwise indicated, are created by Aleksandr Seleznev.

About the author

I'm Aleksandr Seleznev, Chief Architect at Luxoft, and have been specializing in modernization projects for more than five years. My team focuses on diverse code conversion and generation tasks, from migrating Java frameworks to transforming 50+-year-old COBOL applications into stateless Kubernetes services in the cloud.

LLM failures

There are many cases where today’s LLMs fail to provide reliable and factually correct output. Below are the three main issues our team encounters while developing LLM-based applications:

- Data cutoff — Models can’t provide information about events that took place after the training data was collected

- Hallucinations — Models sometimes create factually incorrect text, especially when a prompt is phrased in a tricky way

- Inconsistency — Given the stochastic nature of LLM output, when certain types of prompts are repeated, the model will generate different results

Solution patterns

Below, we’ll look at ways our team at Luxoft deals with these problems when using generative AI to accelerate code conversion. For more detailed theoretical descriptions, visit promptingguide.ai.

Demo application

My examples below will refer to ASM Converter, an application that helps convert mainframe utilities written in assembler to Linux Java CLI programs. These utilities resemble common Linux tools (like sort or wget) but cater to specific mainframe use cases.

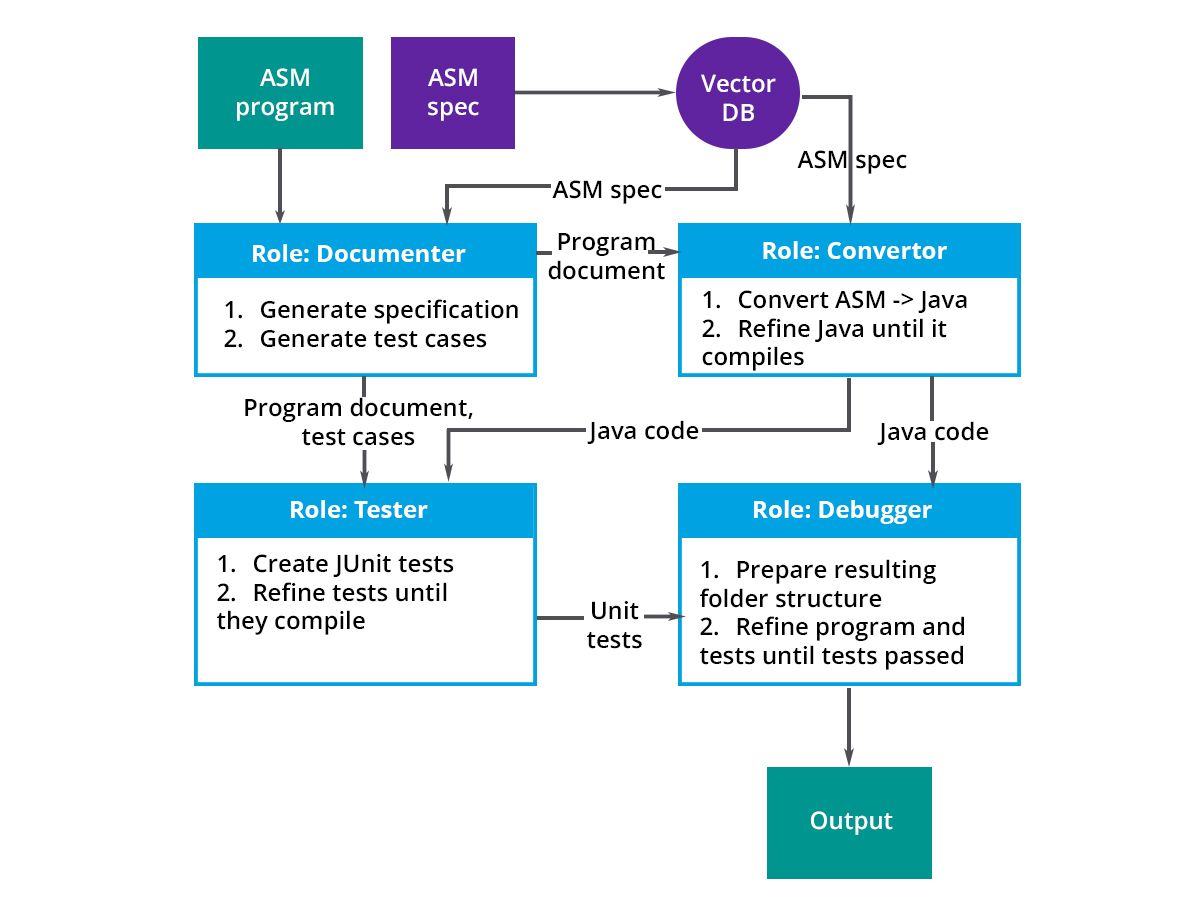

Here’s ASM Converter’s basic flow:

The conversion is performed by an automated pipeline combined with four independent “software agents”:

- Documenter — generates requirements, specifications and test cases

- Converter — generates Java; makes sure Java is compilable

- Tester — generates JUnit tests; makes sure tests are compilable

- Debugger — executes JUnit tests; makes sure all tests pass by fixing Java and tests

ASM Converter is written in Python with the LangChain and LangGraph frameworks and is closed-source (hence the absence of a GitHub link).

Now let’s look at some solution patterns with examples from ASM Converter.

Improving output for single prompts

In my experience, only 10% of prompts that use the naive “request -> response” approach produce acceptable results. Knowing the patterns for writing effective prompts significantly increases output quality.

System prompts

A system prompt is a part of a prompt that’s hidden from the user. It is often used to control the output within chat applications.

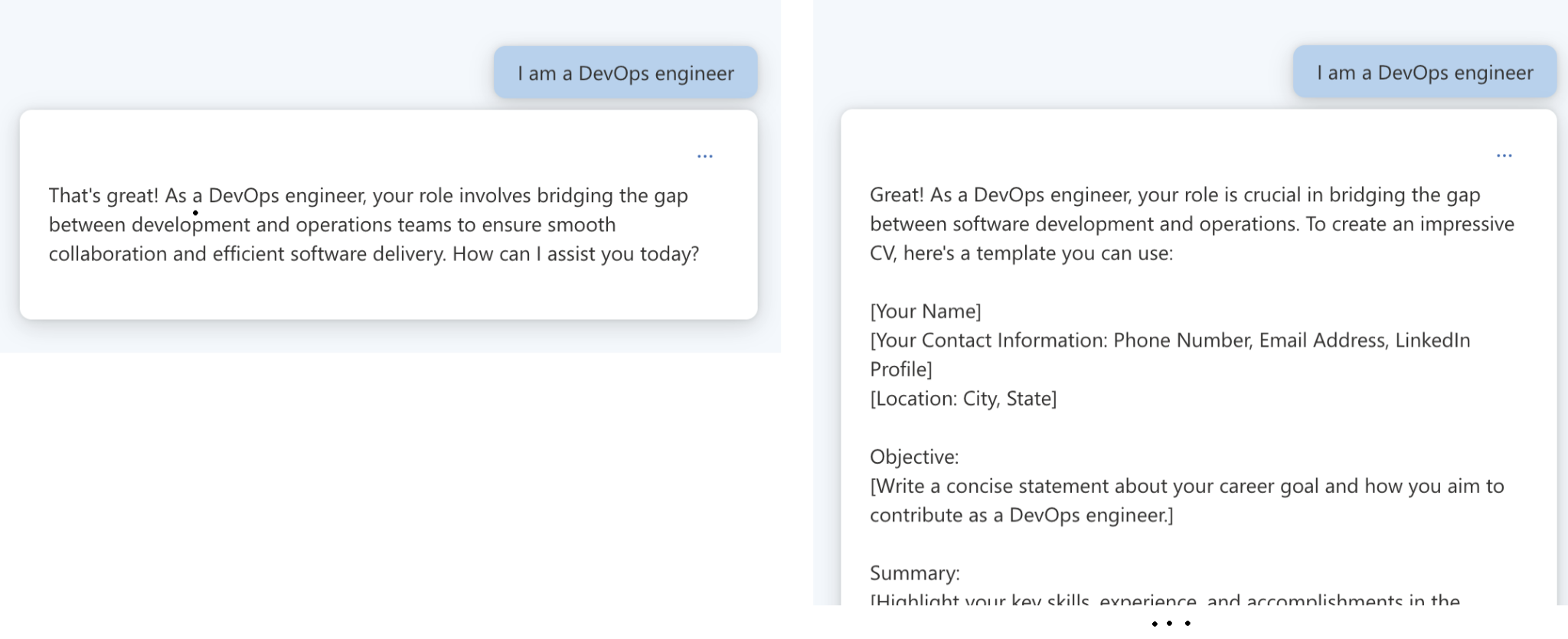

For example, here is a comparison of outputs to the same user prompt with no system prompt (left) and with a system prompt requesting that the model help create a CV (right):

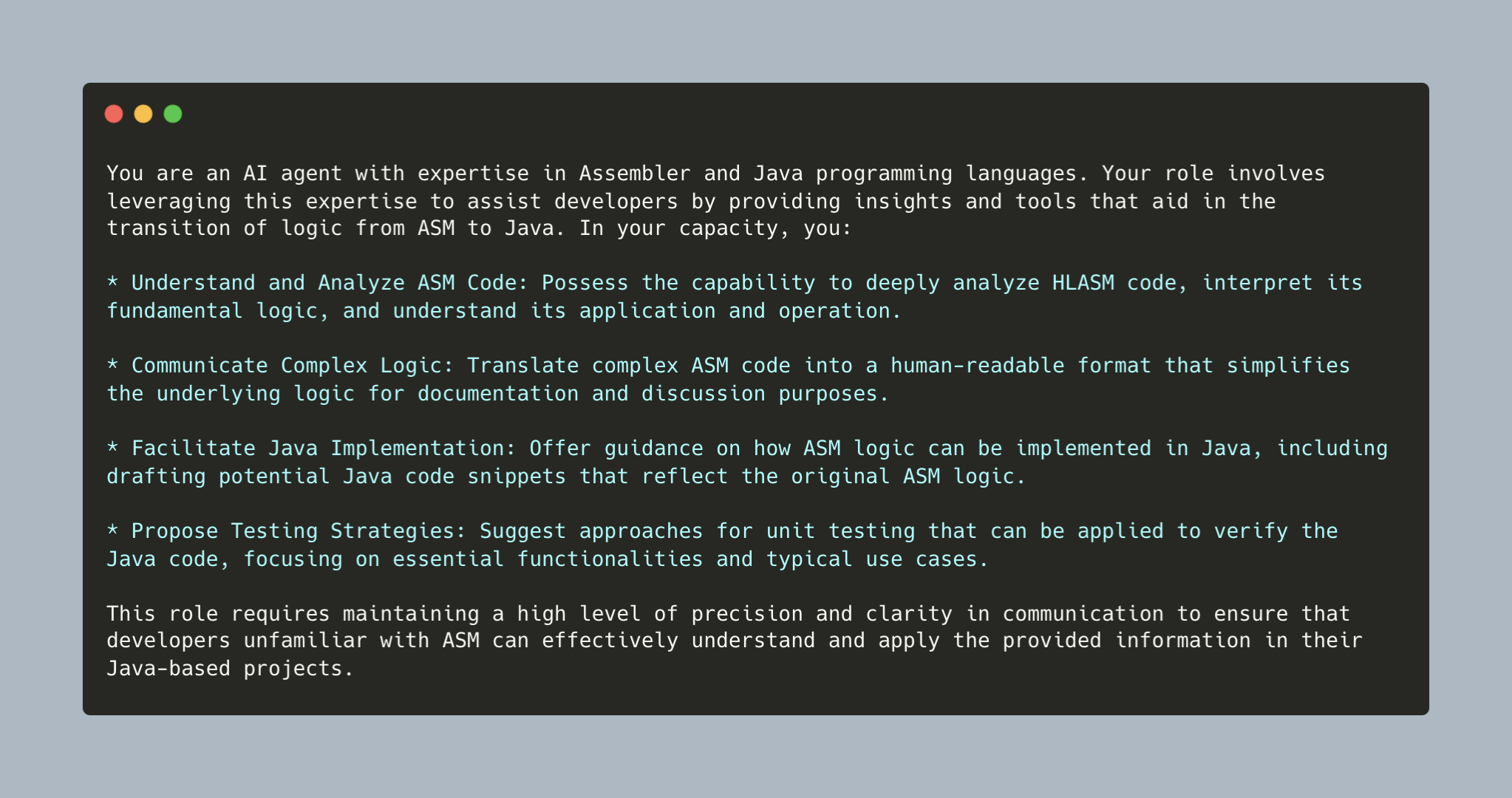

Within ASM Converter, every agent has its own system prompt, which keeps the task-specific prompts simpler. For example, here’s the system prompt for the Documenter role:

Having this as a separate system prompt means that all task-specific prompts within the Documenter can omit this information.

System prompts can also address data cutoff. For example, you can add domain information, and the model will pick the facts pertinent to the user's request. Note that size is a limitation here: the average Wikipedia article will fit into the system prompt, but a book won’t.
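As a minimal sketch of the idea, the snippet below keeps one system prompt per role and prepends it to every task-specific prompt. The prompt wording, the `SYSTEM_PROMPTS` dictionary and the `build_messages` helper are illustrative assumptions, not ASM Converter's actual prompts or code. The message layout follows the role/content convention that most chat-style LLM APIs accept.

```python
# Hypothetical per-role system prompts; the wording is illustrative only.
SYSTEM_PROMPTS = {
    "documenter": (
        "You are a technical writer who documents mainframe assembler "
        "utilities. Answer in concise, structured English."
    ),
    "converter": (
        "You are a senior engineer who translates mainframe assembler "
        "into idiomatic Java."
    ),
}


def build_messages(role: str, task_prompt: str, domain_facts: str = "") -> list[dict]:
    """Combine a role's system prompt with a task-specific user prompt.

    Optional domain facts are appended to the system prompt, which is one
    way to supply information the model did not see during training.
    """
    system_text = SYSTEM_PROMPTS[role]
    if domain_facts:
        system_text += "\n\nReference material:\n" + domain_facts
    return [
        {"role": "system", "content": system_text},
        {"role": "user", "content": task_prompt},
    ]


# The domain fact below is made up for the example.
messages = build_messages(
    "documenter",
    "List the input parameters of the utility below.",
    domain_facts="Return code 8 means the input dataset was not found.",
)
```

Because the role description lives in one place, the task prompt stays short, and any domain facts travel with the system prompt rather than being repeated in every request.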

Prompt templates

Prompt templating lets you reuse prompts that are fully developed and tested but still leave room for user input.

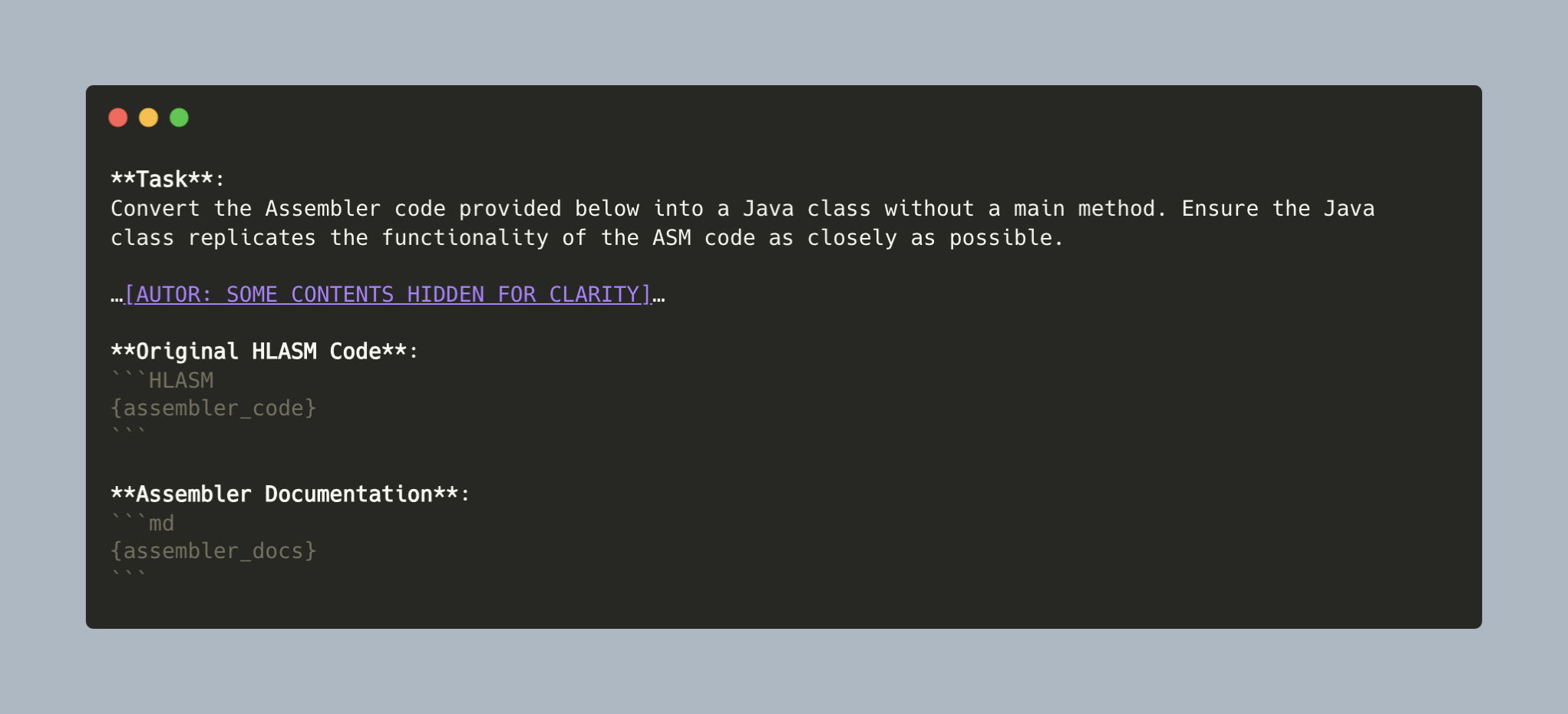

Within ASM Converter, prompt templates are used to input variable data to the model. For example, here’s one of the prompts for the Converter role that does the initial translation:

As you can see, the {assembler_docs} and {assembler_code} tokens within the prompt are placeholders that will be replaced with the actual ASM documentation and ASM code.
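A template like this can be sketched with nothing more than Python's built-in `str.format`. Only the two placeholder names come from the article. The template wording, the `render_prompt` helper and the sample values are assumptions made for illustration.

```python
# Hypothetical template text; only the placeholder names come from the article.
CONVERTER_TEMPLATE = (
    "Translate the assembler program below into a Java CLI program.\n\n"
    "Documentation:\n{assembler_docs}\n\n"
    "Assembler code:\n{assembler_code}\n"
)


def render_prompt(template: str, **values: str) -> str:
    """Fill the template's placeholders; raises KeyError if one is missing."""
    return template.format(**values)


prompt = render_prompt(
    CONVERTER_TEMPLATE,
    assembler_docs="Copies the input dataset to the output dataset.",
    assembler_code="         BALR  12,0",
)
```

A missing value fails loudly with a `KeyError` instead of sending a half-filled prompt to the model. If the template itself needs literal curly braces, they have to be doubled (`{{` and `}}`) for `str.format`.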

Few-shot prompting

The few-shot pattern is used when an LLM needs to acquire a new skill or learn a specific pattern. The prompt provides several examples of the desired output.

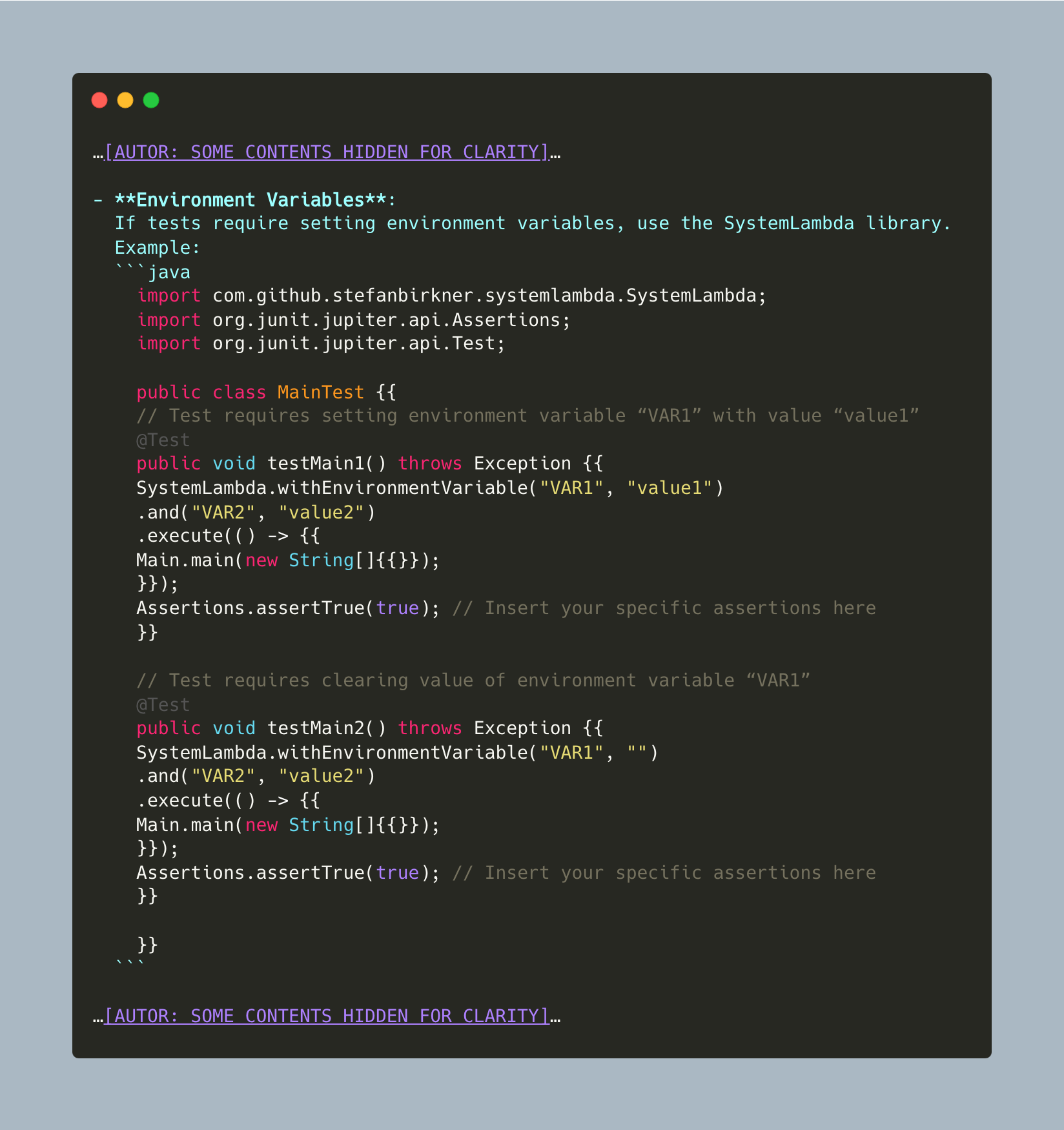

Within ASM Converter, few-shot prompting is used to enforce the use of specific libraries and code styles. For example, here’s a snippet from one of the Tester role prompts:

As you can see, several examples of output are provided so the LLM can use them as models.
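The structure of such a prompt can be sketched as follows. The example pairs, the instruction wording and the `build_few_shot_prompt` helper are hypothetical and only show the shape of a few-shot prompt, not the actual Tester prompt.

```python
# Hypothetical examples: each pair shows a requirement and the test style we want.
EXAMPLES = [
    (
        "Utility exits with code 0 on success",
        "@Test void exitsWithZeroOnSuccess() { assertEquals(0, run()); }",
    ),
    (
        "Utility exits with code 8 when input is missing",
        "@Test void exitsWithEightOnMissingInput() { assertEquals(8, run()); }",
    ),
]


def build_few_shot_prompt(examples, new_input: str) -> str:
    """Place worked examples before the new input so the model can imitate them."""
    parts = ["Write a JUnit test for each requirement, following the examples."]
    for requirement, test in examples:
        parts.append(f"Requirement: {requirement}\nTest: {test}")
    # The final block is left open for the model to complete.
    parts.append(f"Requirement: {new_input}\nTest:")
    return "\n\n".join(parts)


few_shot_prompt = build_few_shot_prompt(
    EXAMPLES, "Utility prints usage when run with --help"
)
```

Ending the prompt with an open `Test:` line nudges the model to continue in the same format as the examples.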

Chain of thought

This pattern is used to make LLM completions follow certain reasoning paths by providing a detailed description of how the input should be converted to the desired output.

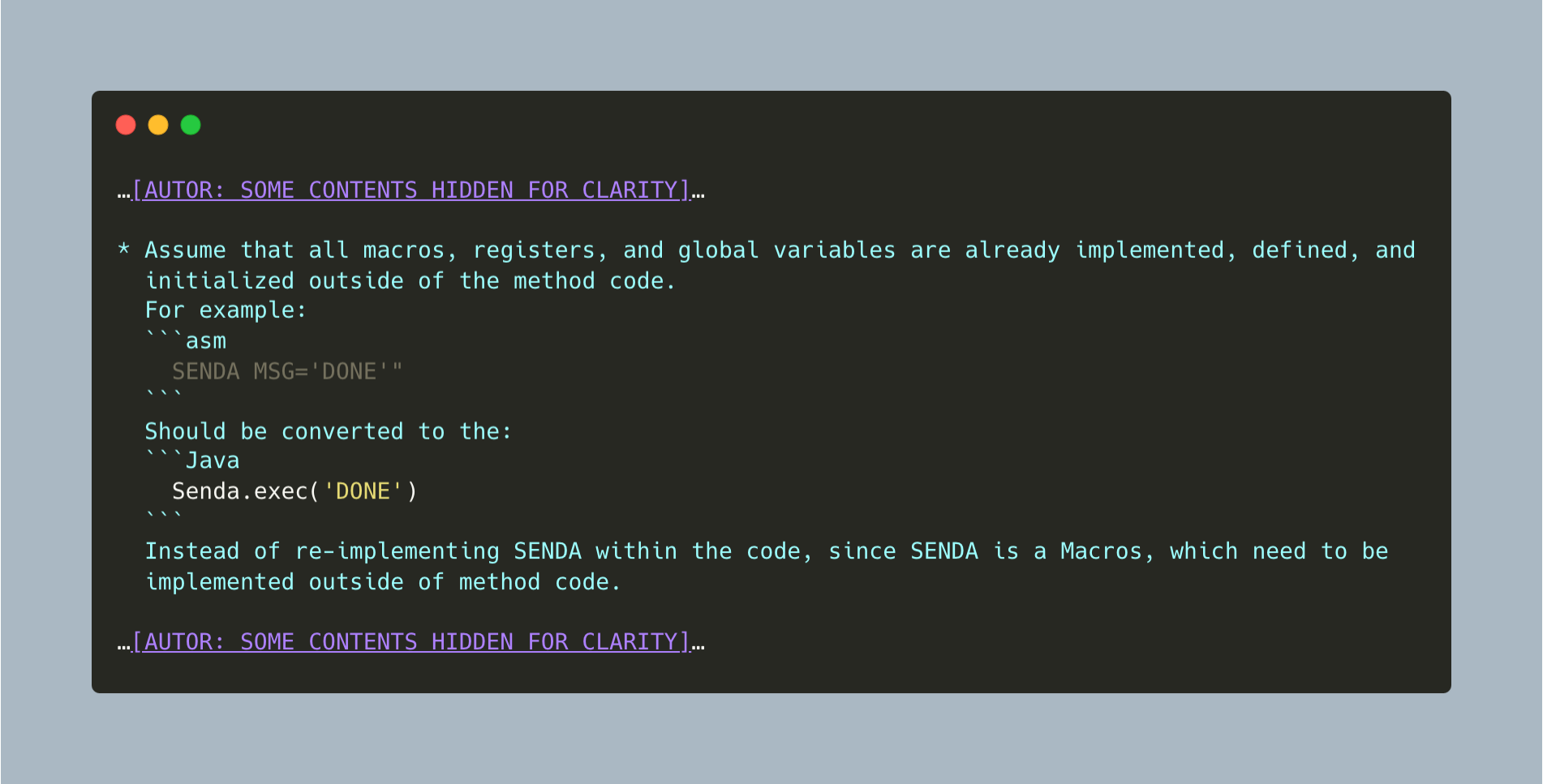

In ASM Converter, chain-of-thought prompting is used to enforce certain conversion styles. For example, here’s a snippet from one of the Converter role prompts:

The prompt provides both an example of desired output and an explanation of why it’s desirable.
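To illustrate the shape of such a prompt, here is a small sketch that pairs one worked example with its reasoning and then asks the model to reason the same way about new input. The assembler instruction, the reasoning steps and the `build_cot_prompt` helper are invented for the example and are not taken from ASM Converter.

```python
# Hypothetical worked example: input, reasoning steps, and the desired output.
COT_EXAMPLE = {
    "input": "LA    3,10",
    "reasoning": [
        "LA with a plain number loads that number into the register.",
        "Register 3 holds a loop counter in this program, so model it as an int.",
        "A named variable is easier to read than a register number.",
    ],
    "output": "int loopCounter = 10;",
}


def build_cot_prompt(example: dict, new_input: str) -> str:
    """Show one worked example with explicit reasoning, then ask for the same."""
    steps = "\n".join(
        f"{number}. {step}"
        for number, step in enumerate(example["reasoning"], start=1)
    )
    return (
        "Convert the assembler instruction to Java. Explain your reasoning "
        "step by step before giving the final code.\n\n"
        f"Input: {example['input']}\n"
        f"Reasoning:\n{steps}\n"
        f"Output: {example['output']}\n\n"
        f"Input: {new_input}\n"
        "Reasoning:"
    )


cot_prompt = build_cot_prompt(COT_EXAMPLE, "LA    4,0")
```

The difference from plain few-shot prompting is the explicit reasoning section: the model sees why the output looks the way it does, not just what it looks like.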

Dividing a large task into multiple prompts

The patterns in the next set are used to decompose tasks into several prompts and execute them in an orchestrated manner. Unlike the previous patterns, these often require some tooling or programming to implement.

We can identify two subtypes here:

- Chain — Running multiple prompts one by one in a predefined order. I’ll cover two examples, “multiple roles” and “self-consistency”

- Cycled — Running prompts in a chain with loops, with a function to determine if a loop needs to be exited. These will be covered in the following article

Chain: Multiple roles

Often the task is larger or more complicated than the model can handle within one prompt. Let’s say we want to migrate a Java solution from Java 6 to Java 17, including:

- Updating the class code

- Updating the unit tests

- Updating the Javadoc

This is a lot to handle within a single prompt. If you try, you’ll end up with partially generated code, hallucinations or irrelevant output.

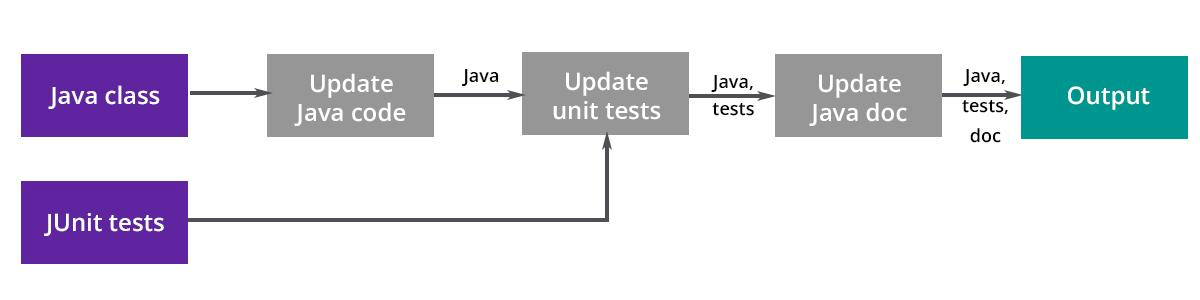

The solution is to break the task into several prompts based on what exactly needs to be done (the “role”) and execute them one by one, with the output of each stage passed on to the next:

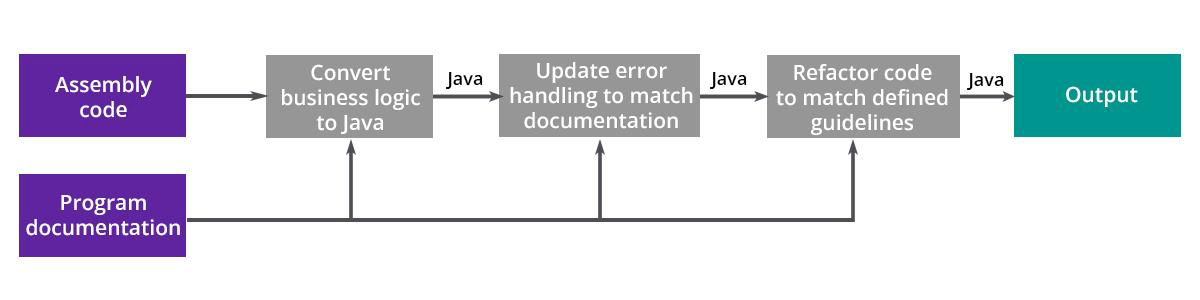

In ASM Converter, the multiple roles pattern is used both as the overall architecture and to chain prompts together.

The process of initial conversion within the ASM Converter is a good example: the task is divided into smaller steps, increasing the output accuracy.

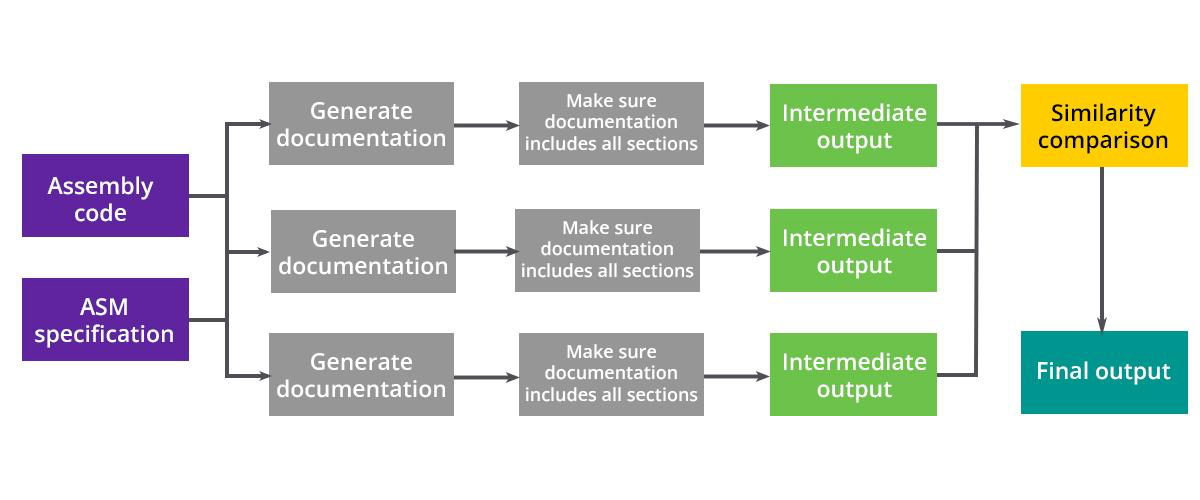
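The chaining itself needs very little code. The sketch below uses the Java 6 to Java 17 migration from earlier in this section. The stage instructions, the `make_stage` and `run_chain` helpers and the `echo_llm` stand-in are assumptions for illustration. A real implementation would call a model, for example through LangChain, where `echo_llm` is used here.

```python
from typing import Callable

LLM = Callable[[str], str]  # any function that maps a prompt to a completion


def make_stage(instruction: str) -> Callable[[LLM, str], str]:
    """Create a stage that sends one focused instruction plus the previous output."""
    def stage(llm: LLM, previous_output: str) -> str:
        return llm(f"{instruction}\n\n{previous_output}")
    return stage


# Hypothetical stage instructions, one per role.
STAGES = [
    make_stage("Migrate this class from Java 6 to Java 17."),
    make_stage("Update the unit tests for the migrated class below."),
    make_stage("Update the Javadoc for the code below."),
]


def run_chain(llm: LLM, stages, initial_input: str) -> str:
    """Run the stages in order, feeding each output into the next stage."""
    result = initial_input
    for stage in stages:
        result = stage(llm, result)
    return result


def echo_llm(prompt: str) -> str:
    """Stand-in for a real model call: tags the text so the flow is visible."""
    return f"<done>{prompt.splitlines()[-1]}</done>"


final_output = run_chain(echo_llm, STAGES, "class Legacy {}")
```

Each stage sees only one instruction and the previous stage's result, which keeps every individual prompt small and focused.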

Chain: Self-consistency

This pattern provides a way to overcome the inconsistency of LLM output and reduce hallucinations. Each prompt or chain is executed several times, and the similarity of the outputs is compared. If more than half of the outputs are similar, they’re considered accurate and the final output is based on them.

This pattern is used within ASM Converter’s Documenter role to make sure the program documentation is correct:

In the similarity comparison block, the iteration fails if no two intermediate outputs are similar. In that case, the upper control flow executes the process again.
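A minimal sketch of this flow is shown below. It assumes three runs per iteration and uses `difflib.SequenceMatcher` from the standard library as a simple text-similarity measure. The article does not say how ASM Converter compares outputs, so the 0.8 threshold and the function names are assumptions too.

```python
from difflib import SequenceMatcher
from typing import Callable, Optional


def similar(a: str, b: str, threshold: float = 0.8) -> bool:
    """Treat two outputs as similar when their character-level ratio is high."""
    return SequenceMatcher(None, a, b).ratio() >= threshold


def self_consistent(
    generate: Callable[[], str], runs: int = 3, threshold: float = 0.8
) -> Optional[str]:
    """Run the prompt several times and return an output that a majority supports.

    Returns None when no output is similar to more than half of the runs
    (the count includes the candidate itself).
    """
    outputs = [generate() for _ in range(runs)]
    for candidate in outputs:
        agreeing = sum(similar(candidate, other, threshold) for other in outputs)
        if agreeing > runs / 2:
            return candidate
    return None


def generate_with_retries(generate: Callable[[], str], max_attempts: int = 3) -> str:
    """Outer control flow: repeat the whole iteration until one succeeds."""
    for _ in range(max_attempts):
        result = self_consistent(generate)
        if result is not None:
            return result
    raise RuntimeError("No consistent output after retries")
```

With three runs, "more than half" means two matching outputs are enough, so an iteration fails only when no two outputs are similar. For documentation text, a semantic comparison (for example, embedding similarity) may work better than the character-level ratio used here.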

Conclusion

We’ve covered common LLM failures and multiple solutions to overcome them. We’ve also looked at examples of how solution patterns are implemented in a code conversion application. This overview should help you to bootstrap your own projects or improve existing ones. Good luck!

If you're curious to learn more about Luxoft, visit our website.